TikTok said 94.3% of violations were removed within 24 hours.

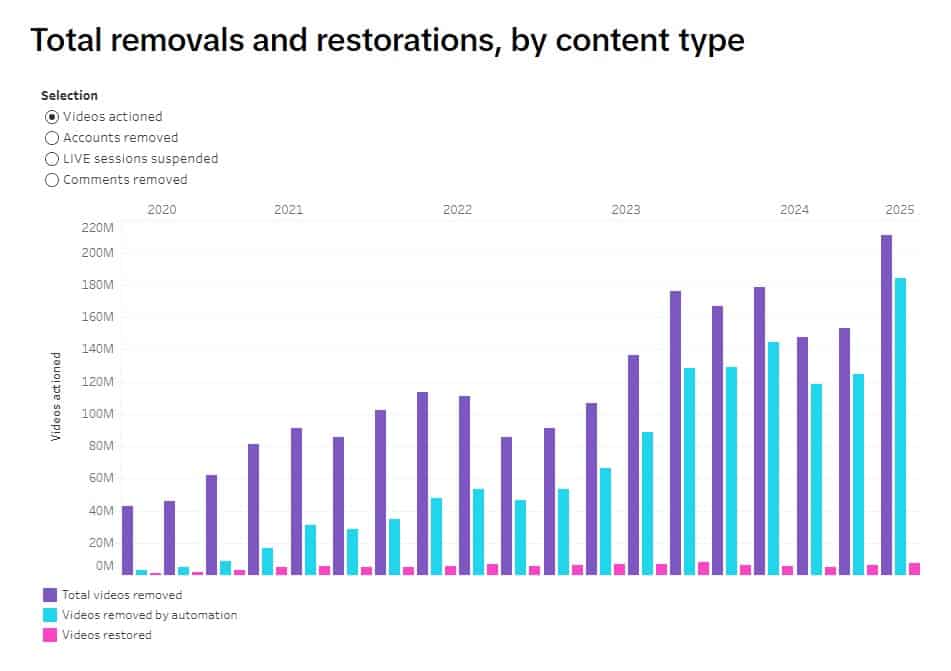

TikTok removed more than 1.1 million videos for violating community guidelines between January and March 2025.

This is a 17% increase on the number of videos removed in the last three months of 2024.

This was revealed in the short-form video platform’s Q1 Community Guidelines Enforcement Report.

Content violation

According to the report, released this week, almost all (99.6%) of the violating content in the first three months of the year was proactively removed before anyone reported it.

TikTok said 94.3% of violations were removed within 24 hours.

Account removal

The report also highlights the removal of more than 107 000 accounts in the country for breaching the platform’s rules.

“With millions of videos uploaded daily and understanding that there is no finish line when it comes to safety, TikTok continuously invests in capabilities to identify and remove harmful content,” the platform said.

TikTok said over 87% of all video removals are now done through automated moderation.

TikTok Live

While TikTok Live enables creators and viewers to connect, the platform has tightened its Live Monetisation Guidelines to clarify what content is and isn’t eligible for monetisation.

According to the report, a worldwide total of 19 million live rooms were stopped this quarter, a 50% increase from the previous quarter.

“This increase shows how effective prioritisation of moderation accuracy has been, as the number of appeals remains steady amid the increase in automated moderation,” it said.

LLMs

TikTok said it also began testing large language models (LLMs) to further support proactive moderation at scale.

LLMs can comprehend human language and perform highly specific, complex tasks. This can make it possible to moderate content with high degrees of precision, consistency and speed.

“Ongoing advancements in AI and other moderation technologies can also benefit the overall well-being of content moderators by requiring them to review less content. It also provides moderators with better tools to do this critical moderation work.”

Well-being summit

In June, TikTok Africa hosted its “My Kind of TikTok Digital Well-being Summit”, bringing together experts, NGOs, creators, media and industry leaders from across sub-Saharan Africa, including South Africa, to explore and improve the state of digital well-being both on and beyond the platform.

Building on a successful pilot in Europe, TikTok is expanding in-app helpline resources to South Africa, in partnership with Childline South Africa, a non-profit organisation that works to protect children from violence and further the culture of children’s rights in the country.

This means that in the coming weeks, young users in South Africa will have in-app access to local helplines that provide expert support when they report content related to suicide, self-harm, hate and harassment.
